Speech recognition has come a long way — from clunky voicemail dictation to real-time subtitles on live streams. In 2025, it’s baked into everything: smart assistants, call centers, translation apps, even legal transcriptions. But as the promises of “human-level accuracy” grow louder, so do the doubts.

For real business use cases, we’re still seeing painful misses. Ask any company running support calls, voicebots, or meeting transcriptions at scale — the tech often fails when it matters most.

Why? Because most ASR (Automatic Speech Recognition) models are trained and tested in clean, ideal conditions, not in the noisy, multilingual, overlapping-speech chaos that defines the real world.

This article explores why traditional benchmarks often mislead, how businesses should evaluate ASR solutions, and why Lingvanex is at the forefront of the next generation of enterprise-ready, adaptive speech recognition systems. We ran a series of tests — across accents, environments, and languages — to compare the most popular speech recognition APIs on the market. The results? Surprising, revealing, and practical for any team building voice-driven products.

Why Accurate Evaluation of Speech Recognition Is Critically Important for Business

Speech recognition is no longer a futuristic gimmick. It's a core technology embedded in banking apps, video conferencing tools, legal software, and enterprise support systems. In 2025, companies don't just use speech recognition — they rely on it.

Poor ASR isn’t just an inconvenience; it's a financial risk. When transcription quality drops, everything built on top of it suffers:

- Customer Service Impact: misrecognized words in support calls lead to unresolved queries and lower customer satisfaction (CSAT) scores.

- Compliance Failures: inaccurate transcripts in regulated industries (finance, healthcare) can mean non-compliance.

- Data Loss: missed insights from meetings or interviews affect product development and strategy.

Let’s put it bluntly:

- If your ASR thinks “cancel my account” is “can sell my count,” that’s not just an error — it’s a customer lost.

- If your compliance department relies on transcripts that miss half the financial terms, you’re falling short of regulatory requirements.

- If your voicebot misunderstands half the Spanish it hears, your NPS goes down the drain.

Speech errors aren’t academic. They’re expensive.

And modern environments aren't simple. Users speak fast. They have accents. They pause, mumble, switch languages mid-sentence. Sometimes there’s background noise, cross-talk, or imperfect mic quality. A truly useful engine must handle it all — consistently, and without manual intervention.

That’s why quality matters. Because a speech engine isn’t just parsing words — it’s parsing intent, instruction, compliance, emotion. If it gets the words wrong, the meaning falls apart. And when you're building products that depend on trust, there’s no room for guesswork.

Methodology for Speech Recognition System Performance Evaluation

To get a realistic picture of how today’s top speech recognition services perform, we designed a testing process that mirrors actual usage scenarios — not lab-perfect conditions. Our goal was simple: evaluate how well each system handles the complexity of everyday speech across multiple domains, devices, and languages.

Here’s how we did it.

Audio Samples

We curated a diverse set of audio recordings to reflect the kinds of input most businesses deal with. These included:

- Clean studio recordings – for baseline accuracy.

- Phone call excerpts – featuring narrow-band, low-bitrate audio.

- Meeting-style dialogue – with overlapping speakers and variable pacing.

- Street and café recordings – with environmental noise and background chatter.

Languages Tested

To reflect global applicability, we included speech samples in twelve languages: English, Simplified Chinese, Arabic, Portuguese, Spanish, French, German, Italian, Russian, Ukrainian, Kazakh, and Polish.

Evaluation Metrics

We focused on two core metrics:

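1. Word Error Rate (WER). WER counts the word substitutions, deletions, and insertions needed to turn the system's output into the reference transcript, then divides that count by the number of words in the reference. It roughly corresponds to the share of words recognized incorrectly, and it can exceed 100% when the system inserts many extra words. A lower WER indicates higher recognition accuracy. We included WER because it is a well-established industry standard that allows a consistent comparison of overall system performance.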

2. Character Error Rate (CER). CER applies the same calculation at the character level, which gives a more granular view of recognition accuracy. It matters most where every letter counts, such as technical terms or proper names, and for languages written without spaces between words, such as Chinese. A lower CER shows that the system captures spoken input with greater precision.
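Both metrics rest on the same calculation. Take the minimum number of substitutions (S), deletions (D), and insertions (I) needed to turn the system's transcript into the reference, and divide it by the length of the reference (N). That gives WER = (S + D + I) / N over words, and CER is the same formula over characters. The minimal Python sketch below illustrates this standard definition. It is not the exact scoring code used in our tests. It assumes only lowercasing and whitespace tokenization, while real evaluations usually also normalize punctuation and numbers. Languages written without spaces, such as Chinese, also need a word segmenter before WER is meaningful.

```python
"""Minimal WER/CER sketch based on Levenshtein edit distance.

Assumption: text is only lowercased and split on whitespace; production
scoring usually applies fuller normalization (punctuation, numerals, etc.).
"""
from typing import Hashable, Sequence


def edit_distance(ref: Sequence[Hashable], hyp: Sequence[Hashable]) -> int:
    """Minimum substitutions + deletions + insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distance from an empty ref to hyp[:j]
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: ref token missing from hyp
                curr[j - 1] + 1,         # insertion: extra token in hyp
                prev[j - 1] + (r != h),  # substitution (free if tokens match)
            )
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate = (S + D + I) / number of reference words."""
    ref_words = reference.lower().split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.lower().split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character Error Rate = (S + D + I) / number of reference characters.

    Spaces are counted as characters here, which is one common convention.
    """
    ref_chars = reference.lower()
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref_chars, hypothesis.lower()) / len(ref_chars)


if __name__ == "__main__":
    ref, hyp = "cancel my account", "can sell my count"
    print(f"WER: {wer(ref, hyp):.2f}")  # 3 edits / 3 reference words -> 1.00
    print(f"CER: {cer(ref, hyp):.2f}")
```

Run on the “cancel my account” example from earlier, the sketch reports a WER of 1.00. The alignment needs two substitutions and one insertion against a three-word reference, so a single misheard phrase wipes out the sentence's word-level score. CER for the same pair is much lower, because most of the characters survive. This is one reason the two metrics are reported together.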

You can learn more about the shortcomings of current methods for comparing speech recognition systems, and how Lingvanex addresses them, in a separate article.

Results That Speak for Themselves: Lingvanex Testing

To evaluate speech recognition performance in realistic conditions, we ran a side-by-side test of Lingvanex against other popular ASR services: Deepgram (Nova-2), AssemblyAI, Gladia, and Speechmatics. We chose these APIs because they represent the current landscape of production-ready solutions. They differ in architecture, deployment model, and strategic focus, but all are positioned as modern, scalable tools for voice-to-text processing.

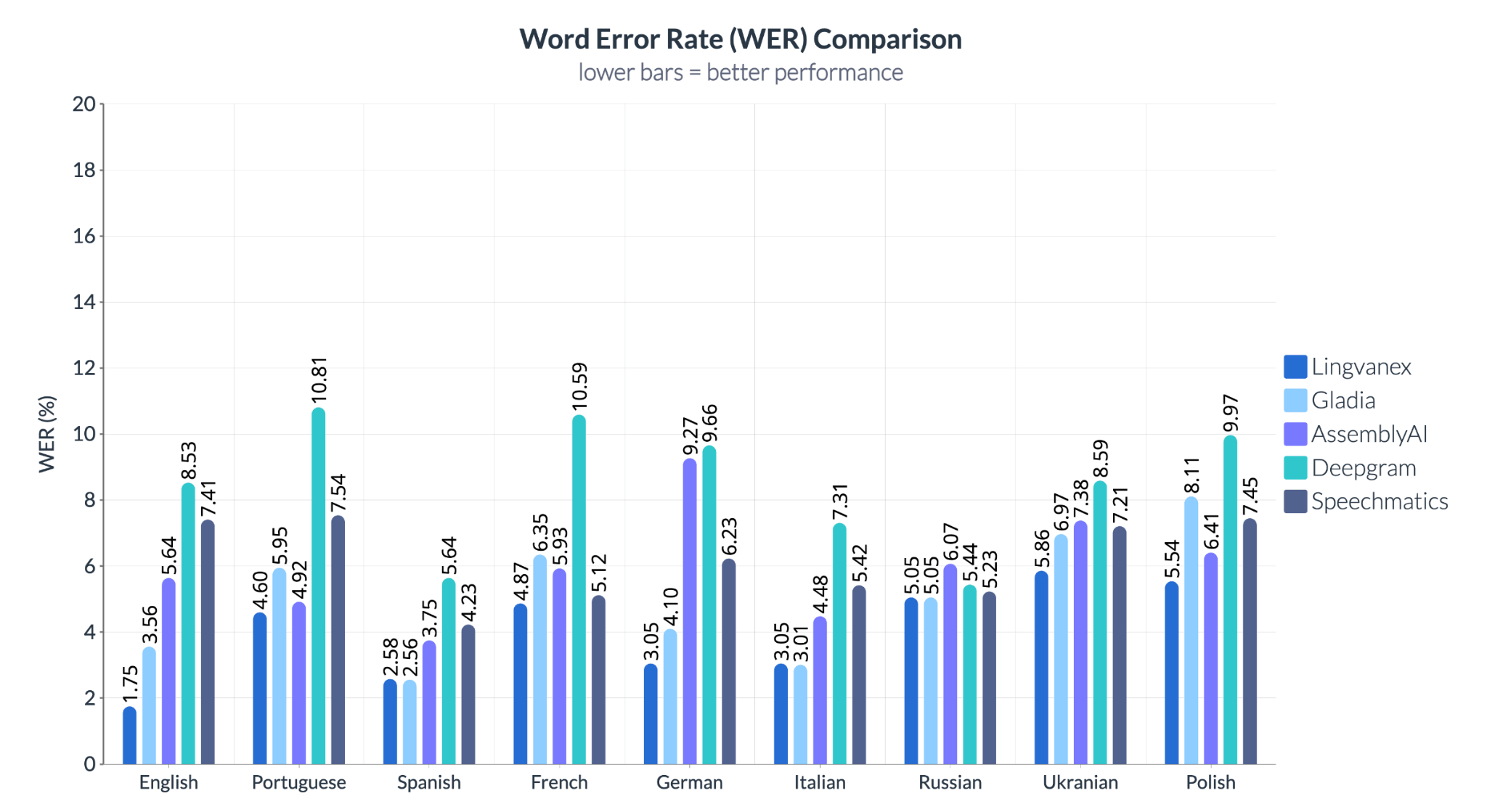

The results vary significantly by language and provider. Lingvanex delivered the lowest error rates overall, including in English, German, and Spanish, three of the most commonly used business languages. Deepgram struggled with Portuguese and French, while Speechmatics showed inconsistent results across Slavic languages such as Ukrainian and Polish.

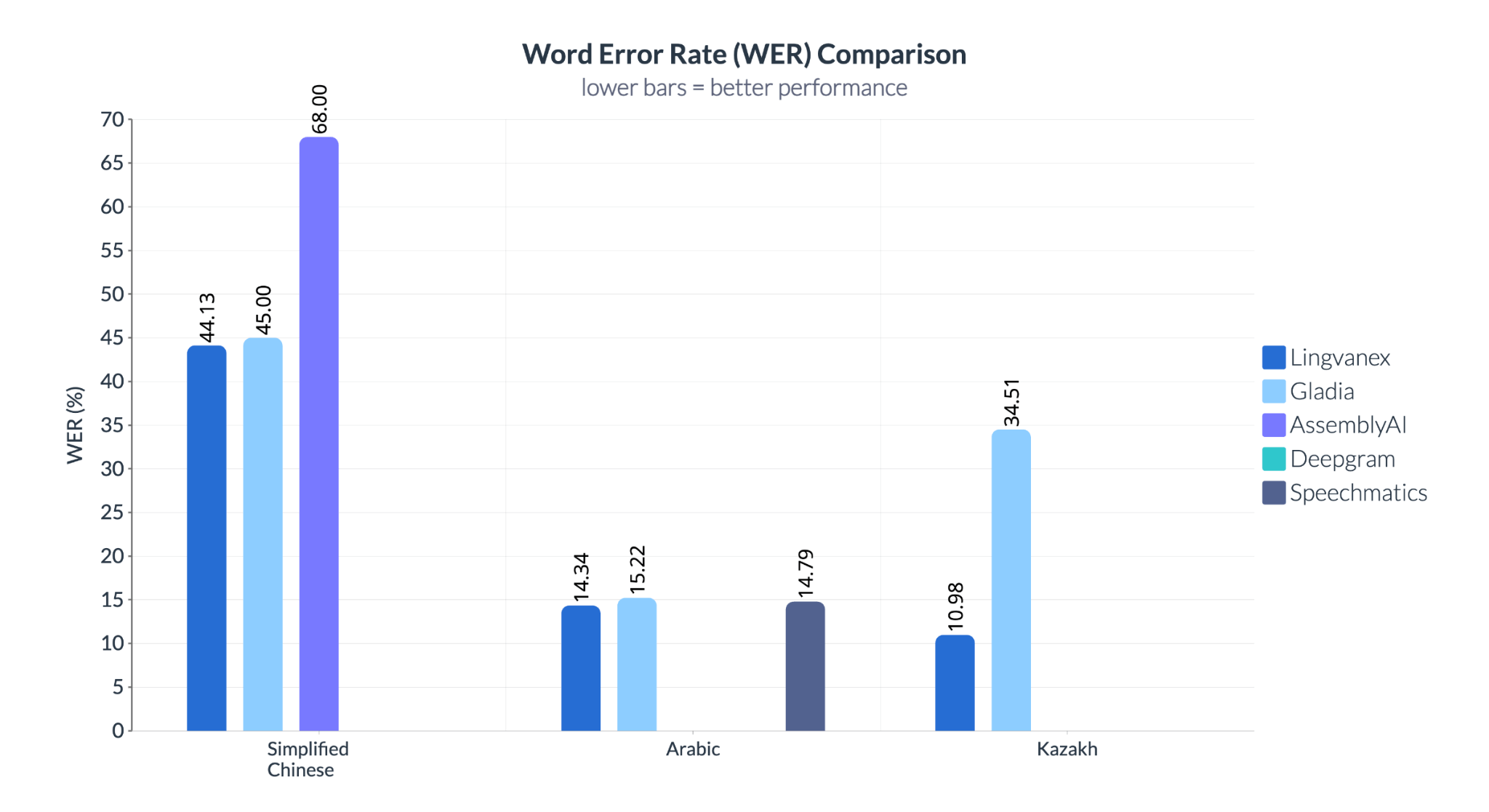

The gap is even more pronounced for Kazakh. Lingvanex leads with a 10.98% WER, while Gladia reaches 34.51%, which highlights Lingvanex's multilingual adaptability. Simplified Chinese shows a similar pattern: Speechmatics falls behind with a 68% WER, compared to 44.13% for Lingvanex.

Enterprise-ready speech recognition means more than English accuracy. For any product targeting diverse markets, handling many languages, accents, and noise levels isn’t optional; it’s mission-critical. If your system can’t handle Arabic fluently or falls apart on Chinese speech, your global ambitions hit a wall. Lingvanex stands out not just in theory but where it actually counts: real-world performance.

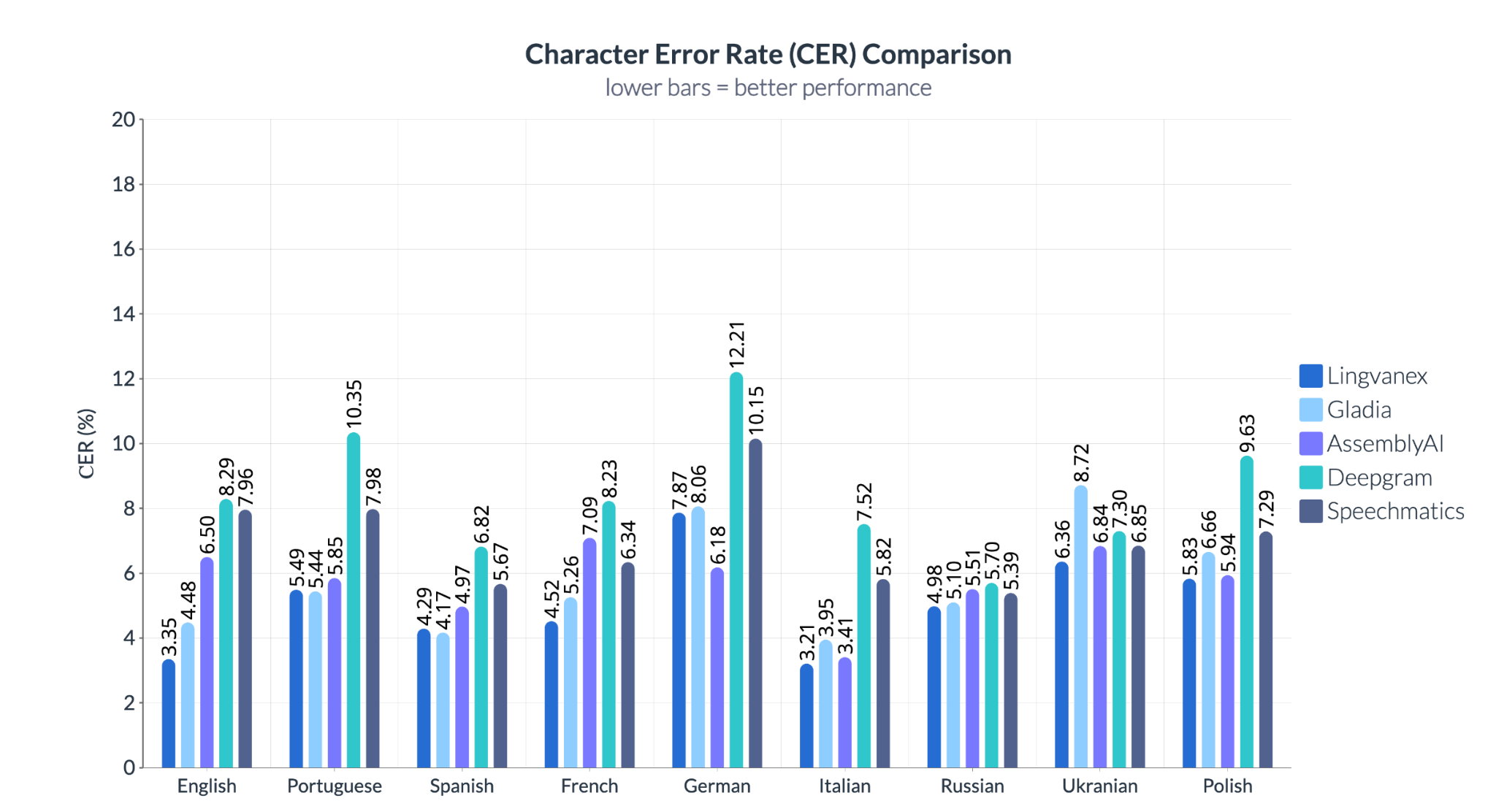

Lingvanex consistently delivers lower character error rates, especially in languages where lost detail can be costly, such as German (6.18%) and English (3.35%). Deepgram shows the highest variance, peaking at over 12% CER in German, which is a concern for technical or legal content. Speechmatics trails in English and Polish. Gladia's results are unpredictable, with particular weaknesses in Kazakh and the Slavic languages.

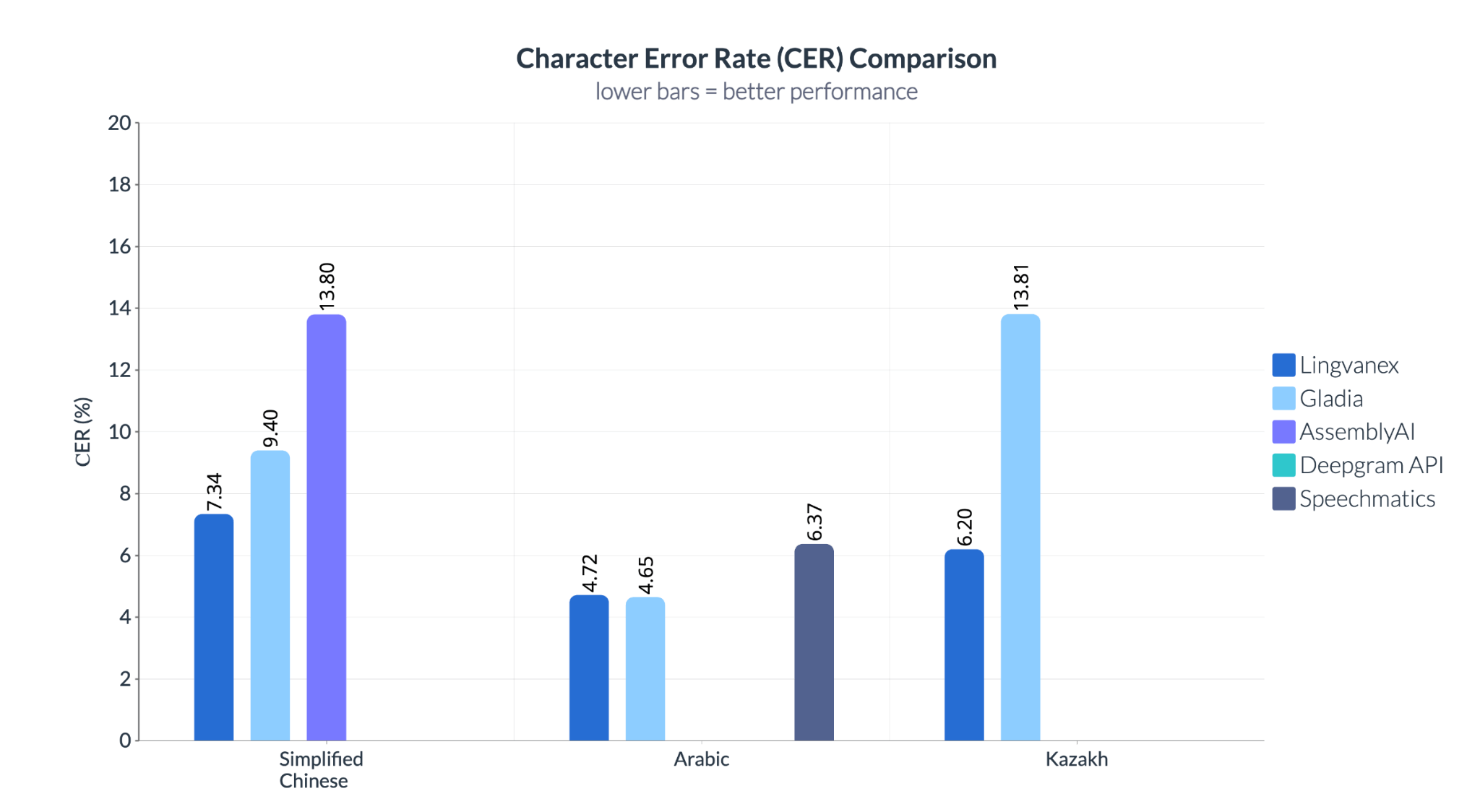

Lingvanex again shows the lowest CER for all three languages in this chart. In Chinese, AssemblyAI returns a 13.8% error rate, nearly twice that of Lingvanex (7.34%). In Kazakh, Gladia’s 13.81% CER reveals a major gap in language support, while Lingvanex remains accurate even for this lower-resource language. CER measures errors below the word level, so it exposes finer transcription weaknesses than WER does. That matters when names, commands, or compliance terms are at stake.
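The short sketch below makes the quoted gaps easier to compare. It converts pairs of error rates from this section into two common summary numbers: the ratio between the two rates, and the relative error-rate reduction, (competitor - Lingvanex) / competitor. It only re-derives figures already given in the text and assumes each pair was scored on the same test set. It is an illustration, not part of the original test harness.

```python
"""Relative comparison of error rates quoted in the results above (percent)."""

# (Lingvanex, competitor) pairs, copied from the figures in the text.
QUOTED_PAIRS = {
    "Kazakh WER vs Gladia": (10.98, 34.51),
    "Simplified Chinese WER vs Speechmatics": (44.13, 68.0),
    "Chinese CER vs AssemblyAI": (7.34, 13.8),
}


def ratio(ours: float, theirs: float) -> float:
    """How many times higher the competitor's error rate is."""
    return theirs / ours


def relative_reduction(ours: float, theirs: float) -> float:
    """Relative error-rate reduction: (theirs - ours) / theirs."""
    return (theirs - ours) / theirs


for label, (ours, theirs) in QUOTED_PAIRS.items():
    print(
        f"{label}: {ratio(ours, theirs):.2f}x the error rate, "
        f"{relative_reduction(ours, theirs):.0%} relative reduction"
    )
```

For the Chinese CER pair, this gives a ratio of about 1.88, which matches the “nearly twice” description. For Kazakh WER, the competitor's rate is a little over three times higher.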

Across both Word Error Rate (WER) and Character Error Rate (CER), Lingvanex consistently shows:

- The lowest error rates across the board.

- Stable performance across diverse languages, from English and Spanish to Kazakh and Chinese.

- Reliable precision even in difficult scenarios: noisy input, uncommon languages, and overlapping speech.

Lingvanex is the only system in this comparison that’s genuinely built for production — not just demos or English-only use. Its consistent performance across languages, metrics, and noise conditions shows:

It’s accurate. It’s robust. And it’s ready to scale — globally.

Lingvanex: Adaptive Solutions for Your Business

Unlike cloud-only APIs that treat speech-to-text (STT) as a siloed service, Lingvanex offers a full-stack solution: speech recognition, translation, and language routing, all customizable and deployable on your terms.

As a package, Lingvanex offers several distinct strengths:

- Exceptional Flexibility and Customization. Lingvanex provides highly adaptable solutions, enabling businesses to tailor the system to their exact needs. This includes specialized model training for domain-specific jargon and compliance with strict security protocols.

- Lightning-Fast Data Processing. With Lingvanex, one minute of audio is processed in just 3.44 seconds, drastically outperforming most market alternatives. This speed translates into major operational efficiency gains.

- Boost in Workforce Productivity. By automating speech-to-text tasks, Lingvanex minimizes the need for manual transcription. This allows employees to focus on higher-value activities, dramatically increasing overall productivity.

- Superior Customer Interaction. Thanks to advanced recognition capabilities, Lingvanex accurately understands diverse accents, dialects, and even conversations with multiple speakers in noisy backgrounds. This leads to smoother, more satisfying interactions with customers globally.

- Significant Cost Reduction. The system’s remarkable speed and accuracy sharply cut expenses associated with outsourcing transcription services and manual audio processing, making it a cost-effective choice for businesses.

- Effortless Integration. Lingvanex integrates seamlessly into your existing infrastructure via its robust API and SDK offerings. This ensures quick deployment without the need for costly development efforts.

- Broad Format Compatibility. Lingvanex supports a wide range of audio and media formats, including WAV, MP3, OGG, and FLV, for broad compatibility with your current data sources.

- Enterprise-Grade Data Security. For organizations handling sensitive information, Lingvanex delivers secure on-premise deployment options, ensuring strict adherence to data protection standards and compliance regulations.
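Speech recognition speed is often expressed as a real-time factor (RTF): processing time divided by audio duration. Values below 1 mean faster than real time. The sketch below applies that definition to the 3.44-seconds-per-minute figure quoted above and estimates turnaround for a hypothetical backlog. The 1,000-hour workload and the stream counts are illustrative assumptions. The estimate also assumes throughput scales linearly with parallel streams, which real deployments may not achieve.

```python
"""Back-of-the-envelope throughput estimate from a quoted processing speed."""

AUDIO_SECONDS = 60.0        # one minute of audio
PROCESSING_SECONDS = 3.44   # processing time quoted above for that minute


def real_time_factor(processing_s: float, audio_s: float) -> float:
    """RTF = processing time / audio duration (below 1.0 = faster than real time)."""
    return processing_s / audio_s


def backlog_hours(audio_hours: float, rtf: float, parallel_streams: int = 1) -> float:
    """Wall-clock hours to process a backlog of recorded audio.

    Hypothetical model: assumes throughput scales linearly with the number
    of parallel streams and ignores upload, queueing, and I/O overhead.
    """
    if parallel_streams < 1:
        raise ValueError("parallel_streams must be at least 1")
    return audio_hours * rtf / parallel_streams


rtf = real_time_factor(PROCESSING_SECONDS, AUDIO_SECONDS)
print(f"RTF: {rtf:.4f} (about {1 / rtf:.1f}x faster than real time)")

# Hypothetical workload: 1,000 hours of recorded support calls.
for streams in (1, 8):
    print(f"{streams} stream(s): {backlog_hours(1_000, rtf, streams):.1f} h")
```

Under these assumptions, the quoted speed corresponds to an RTF of about 0.057. A 1,000-hour backlog would then take roughly 57 hours on a single stream.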

Conclusion: Lingvanex — Your Partner in Global Voice Infrastructure

As voice becomes a critical interface for global business, it's clear that:

- Legacy ASR models can’t handle domain-specific complexity

- Evaluation must go beyond simple word errors

- Adaptability, security, and customization are essential

Lingvanex combines precision, performance, and flexibility to meet the needs of enterprises across industries — with unmatched support for multilingual and real-world scenarios.

Whether you're building a multilingual voicebot, transcribing legal evidence, or analyzing customer support calls, Lingvanex gives you the tools to deliver accurate, secure, and scalable speech recognition.