Subtitles have become an essential part of how we create and share content. From videos on social media to corporate meetings and educational lectures, subtitles make it easier for people to understand and engage with information. They help break language barriers, improve accessibility for those with hearing impairments, and let viewers follow content in settings where audio is muted or unavailable. This article explores how subtitle-generating tools work, the challenges they face, and the many ways they are transforming industries. Additionally, we’ll take a closer look at Lingvanex, a solution that offers businesses secure, customizable, and efficient subtitle generation with robust language support and seamless integration into workflows.

Core Technologies Behind Auto Subtitle Generators

Auto subtitle generators rely on three core technologies: Automatic Speech Recognition (ASR), Natural Language Processing (NLP), and timing synchronization. Together, they enable accurate and efficient subtitle generation.

Automatic Speech Recognition (ASR)

At the heart of auto subtitle generators lies ASR technology, which converts spoken language into written text.

ASR systems are powered by three primary components:

- Acoustic Models. These models analyze audio signals and identify speech patterns, distinguishing them from background noise.

- Language Models. These models predict likely word sequences, improving the system's ability to transcribe speech accurately, even in challenging conditions.

- Neural Networks. Trained on large amounts of recorded speech, neural networks help ASR systems recognize different accents, dialects, and speech variations.
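
To make the interplay between acoustic and language models concrete, here is a minimal sketch of how a language model can break a tie between two transcripts that sound almost the same. Everything in it is hypothetical: the candidate sentences, the scores, and the function names are invented for illustration, and production ASR systems use far larger neural models rather than a hand-written bigram table.

```python
# Hypothetical acoustic-model log-probabilities for two candidate transcripts
# that sound nearly identical. The numbers are invented for illustration.
ACOUSTIC_LOGPROB = {
    "their car is over there": -4.1,
    "there car is over their": -4.0,
}

# Toy bigram language model: log-probability of each word given the previous
# word. "<s>" marks the start of the sentence. Values are made up.
BIGRAM_LOGPROB = {
    ("<s>", "their"): -2.0,
    ("<s>", "there"): -2.0,
    ("their", "car"): -1.5,
    ("there", "car"): -6.0,
    ("car", "is"): -1.0,
    ("is", "over"): -2.0,
    ("over", "there"): -1.0,
    ("over", "their"): -5.0,
}
UNSEEN_LOGPROB = -8.0  # fallback score for word pairs missing from the table


def language_model_score(sentence: str) -> float:
    """Sum the bigram log-probabilities over the whole sentence."""
    words = ["<s>"] + sentence.split()
    return sum(
        BIGRAM_LOGPROB.get(pair, UNSEEN_LOGPROB)
        for pair in zip(words, words[1:])
    )


def best_transcript(candidates: dict, lm_weight: float = 1.0) -> str:
    """Pick the candidate with the highest combined acoustic + language score."""
    return max(
        candidates,
        key=lambda s: candidates[s] + lm_weight * language_model_score(s),
    )
```

With the language model switched off (`lm_weight=0.0`), the slightly higher acoustic score wins and the ungrammatical candidate is chosen. With the default weight, the word-sequence probabilities favor "their car is over there".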

Natural Language Processing (NLP)

NLP plays a crucial role in refining the output of ASR systems. While ASR converts speech into text, NLP enhances its quality by understanding the context and structure of language.

Key contributions of NLP include:

- Contextual Understanding. By analyzing the meaning of sentences, NLP minimizes errors in transcription, such as confusing homophones ("there" vs. "their").

- Handling Accents and Slang. NLP algorithms adapt to variations in speech, improving transcription accuracy even in informal or regional language.

- Multilingual Support. Advanced NLP systems enable subtitle generation in multiple languages, catering to global audiences.

Timing Synchronization

Generating accurate subtitles requires precise alignment between text and audio. Timing synchronization involves segmenting audio into smaller chunks and matching each segment with its corresponding text. Techniques like forced alignment use acoustic models to map text to audio timestamps. These timestamps ensure that each subtitle appears on-screen at the moment the words are spoken and disappears when they end, enhancing the viewer's experience.
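
Once timestamps are available, they have to be written out in a subtitle format. The sketch below shows one way to turn `(start, end, text)` tuples into the widely used SubRip (SRT) layout. The function names and sample cues are assumptions made for this example, not part of any particular tool.

```python
def format_srt_timestamp(seconds: float) -> str:
    """Convert seconds to the SRT form HH:MM:SS,mmm (comma before milliseconds)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def render_srt(cues) -> str:
    """Render (start_seconds, end_seconds, text) tuples as numbered SRT blocks."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n"
            f"{format_srt_timestamp(start)} --> {format_srt_timestamp(end)}\n"
            f"{text}\n"
        )
    # Joining with a newline leaves one blank line between consecutive blocks.
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical cues, as an alignment step might produce them.
    print(render_srt([(0.0, 2.4, "Hello"), (2.4, 5.0, "World")]))
```

Each block carries a sequence number, a start and end time, and the text, which is all a video player needs to display the subtitle at the right moment.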

Key Steps in the Subtitle Generation Process

The process of creating auto subtitles involves several key stages, from inputting audio to refining the final text. Each step is designed to ensure accuracy, readability, and synchronization.

- 1. Audio Input. The process begins with the audio input, which can be a pre-recorded file (e.g., MP3, WAV) or live audio from events or broadcasts. Pre-recorded audio allows for more accurate processing since it can be analyzed multiple times. In contrast, live audio requires real-time transcription, which is more challenging due to time constraints and potential background noise.

- 2. Transcription. The next step is transcription, where ASR systems convert spoken language into text. Despite advancements in ASR, challenges like overlapping voices, unclear pronunciation, and noisy environments can hinder accuracy. High-quality audio recordings with minimal background noise typically yield the best results.

- 3. Timing and Segmentation. Once the text is transcribed, it is divided into smaller, readable chunks. This step, known as segmentation, ensures that subtitles are concise and synchronized with speech. Each chunk is aligned with audio timestamps to maintain seamless timing, preventing delays or mismatches that could disrupt the viewer's experience.

- 4. Editing and Refinement. The final step involves refining the subtitles to ensure accuracy and readability. While auto subtitle generators produce raw transcriptions, manual review is often necessary to correct errors, improve grammar, and adjust punctuation. Many tools also offer auto-correction features, streamlining the editing process.
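
The timing and segmentation step above can be sketched in a few lines. This example assumes the transcription stage returns word-level timestamps and groups words into cues using two illustrative limits, a maximum character count and a maximum duration. The `Word` class, the function names, and the default limits are hypothetical choices for this sketch; real tools usually also consider punctuation, pauses, and reading speed.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the beginning of the audio
    end: float    # seconds from the beginning of the audio


def _to_cue(words):
    """Collapse a run of words into one (start, end, text) cue."""
    return (words[0].start, words[-1].end, " ".join(w.text for w in words))


def segment_words(words, max_chars=42, max_duration=6.0):
    """Greedily group words into cues that respect both limits."""
    cues = []
    current = []
    for word in words:
        if current:
            candidate_text = " ".join(w.text for w in current) + " " + word.text
            too_long = len(candidate_text) > max_chars
            too_slow = word.end - current[0].start > max_duration
            if too_long or too_slow:
                # Close the current cue before this word would break a limit.
                cues.append(_to_cue(current))
                current = []
        current.append(word)
    if current:
        cues.append(_to_cue(current))
    return cues


if __name__ == "__main__":
    sample = [
        Word("Subtitles", 0.0, 0.5),
        Word("help", 0.5, 0.8),
        Word("everyone", 0.8, 1.3),
        Word("follow", 1.3, 1.7),
        Word("along", 1.7, 2.1),
    ]
    for cue in segment_words(sample, max_chars=20):
        print(cue)
```

A greedy approach like this is simple and predictable, which makes the later manual review step easier, although it can split phrases in awkward places.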

Applications of Auto Subtitle Generators

Auto subtitle generators are revolutionizing various industries by enhancing content accessibility and expanding audience reach. Their applications span content creation, accessibility, globalization, and professional or educational use, making them an indispensable tool in today’s digital age.

In content creation, platforms like YouTube and social media heavily rely on subtitles to engage viewers and boost visibility. Subtitles make videos more inclusive, ensuring that even users who cannot play audio can fully enjoy the content.

From an accessibility perspective, subtitles are essential for individuals with hearing impairments, enabling them to access audiovisual content effortlessly. Accurate and synchronized subtitles promote inclusivity and equal access to information.

When it comes to globalization, subtitles help break language barriers, allowing content to reach international audiences. Multilingual subtitles enable creators to connect with diverse linguistic communities, fostering global engagement and understanding.

In corporate and educational settings, auto subtitle generators are invaluable tools. They streamline the transcription of meetings, webinars, and lectures, providing accurate records while improving accessibility for remote participants.

By making content more inclusive, accessible, and globally relevant, auto subtitle generators are transforming the way information is shared and consumed across industries.

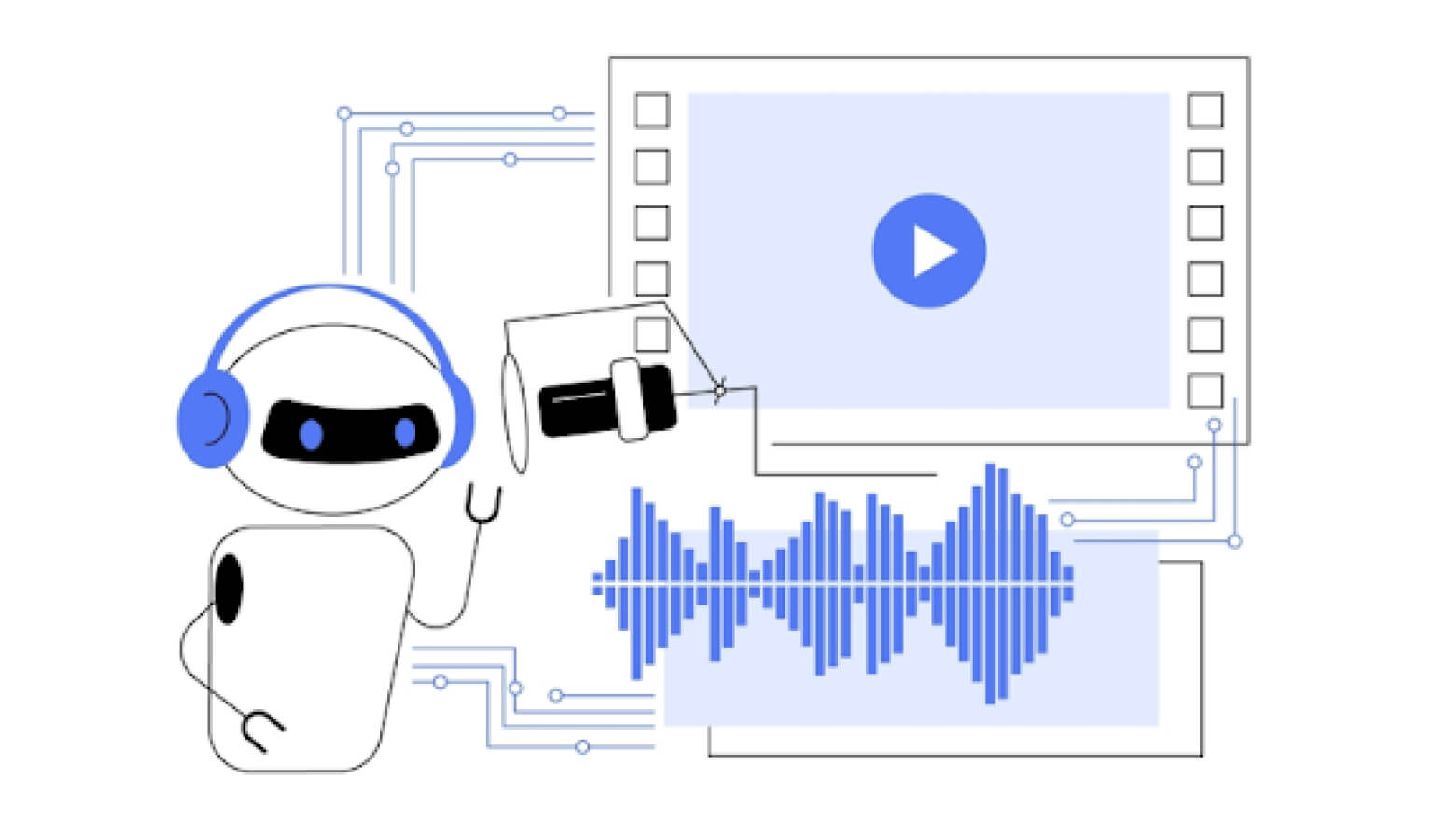

Lingvanex On-premise Speech Recognition - Your Trusted Partner

Lingvanex On-premise Speech Recognition lets organizations process and analyze spoken language locally, on their own servers, rather than relying on cloud-based solutions. The system is designed to meet the specific needs of enterprises, providing a robust and secure way to handle speech data.

Key Features of Lingvanex On-premise Speech Recognition:

- Wide Language Support. The Lingvanex system supports 91 languages, enabling organizations to transcribe and translate spoken content across diverse linguistic needs.

- Data Privacy and Security. For companies dealing with sensitive information, Lingvanex offers on-premises solutions that ensure full compliance with data protection regulations. Organizations can process sensitive documents offline, minimizing the risk of data exposure since no information is transmitted outside the company’s infrastructure.

- Unlimited Transcription. Organizations can enjoy unlimited transcription capabilities for a fixed monthly price, starting at €400. This pricing structure allows for extensive use without incurring additional costs based on volume.

- Flexibility and Customization. Lingvanex provides customized options to tailor the system to unique enterprise requirements, including the ability to customize models for industry terminology and security protocols.

- Reduced Processing Time. Lingvanex dramatically speeds up audio data processing, handling one minute of audio in just 3.44 seconds, which is significantly faster than many competing solutions.

- Cost Savings on Data Processing. Lingvanex's fast processing speed and high accuracy reduce the costs associated with outsourcing transcription and other manual voice data processing tasks.

- Seamless Integration into Business Processes. Lingvanex integrates seamlessly with existing systems via APIs and SDKs, enabling rapid implementation without the need for extensive development or modification.

- Support for Multiple Data Formats. Lingvanex is compatible with a variety of audio formats, including common ones such as WAV and MP3, as well as more specialized formats such as OGG and FLV.
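
To put the processing-speed figure from the feature list in perspective, the short calculation below converts it into a real-time factor (processing time divided by audio duration). It assumes throughput scales linearly with audio length, which is a simplification. Only the 3.44-second figure comes from the list above; the function names are made up for this sketch.

```python
SECONDS_TO_PROCESS_ONE_MINUTE = 3.44  # figure quoted in the feature list


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Processing time divided by audio duration; below 1.0 is faster than real time."""
    return processing_seconds / audio_seconds


def estimated_processing_seconds(audio_seconds: float, rtf: float) -> float:
    """Estimate processing time, assuming throughput scales linearly."""
    return audio_seconds * rtf


rtf = real_time_factor(SECONDS_TO_PROCESS_ONE_MINUTE, 60.0)
speedup = 1.0 / rtf
one_hour = estimated_processing_seconds(3600.0, rtf)

if __name__ == "__main__":
    print(f"real-time factor: {rtf:.4f}")
    print(f"speed-up over real time: {speedup:.1f}x")
    print(f"estimated time for one hour of audio: {one_hour:.1f} s")
```

Under that linear assumption, the real-time factor is roughly 0.057, about 17 times faster than real time, so an hour of audio would take around 206 seconds to process.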

Conclusion

Auto subtitle generators have revolutionized the way content is created, accessed, and distributed. By leveraging advanced technologies like Automatic Speech Recognition (ASR), Natural Language Processing (NLP), and timing synchronization, these systems enable the efficient creation of accurate and synchronized subtitles. Their applications span content creation, accessibility, globalization, and professional environments. They enhance inclusivity by providing equal access to audiovisual content for hearing-impaired users and allow creators to reach multilingual audiences. Tools like Lingvanex further expand the potential by offering customizable, secure, and cost-effective solutions with robust language support and seamless integration into existing workflows.